Author: AXYZ DESIGN

Date: 2023-09-20

EXPLORING THE IMPACT OF AI

IS AI CHANGING ARCHITECTURAL VISUALIZATION WORKFLOWS?

INTERVIEW WITH FRANCESCO TESTA OF PROMPT

Image made by Prompt studio, using Midjourney AI.

Every day, we witness remarkable advancements in the field of artificial intelligence, with increasingly impressive solutions that sometimes evoke admiration and, on occasion, raise concerns.

For us at AXYZ, as software developers, and for you, as artists, it is natural to wonder how we can make the most of these new technologies. In what ways could they enrich our daily tasks? Could they help us improve our images and products? And, ultimately, are they accessible to everyone?

To shed light on these questions, we've had the privilege of interviewing Francesco Testa, the founder and creative mind behind the Catalan studio Prompt. This creative studio is widely recognized for its ability to bring architectural projects to life through captivating visualizations, animations, and 360° virtual tour experiences.

Before we proceed, allow me to express our sincere gratitude for the valuable opportunity to delve into the projects and work carried out at your studio, and for agreeing to take part in this brief interview.

- Last year, the predominant topic of conversation was the Metaverse, but a year later, it seems that Artificial Intelligence (AI) has taken center stage. Do you consider this to be a passing phenomenon or a genuine trend?

It's perfectly understandable to compare the Metaverse and artificial intelligence, as both received significant attention and media coverage. However, we believe they are diametrically opposed concepts, and that difference explains why one has outlasted the other, at least for now. The Metaverse is a concept that transforms our physical space into a digital one, creating a sense of bridging distances and attempting to humanize digital relationships through "optimistic" projections of ourselves, known as avatars. The push to create this new digital world was strongly fueled by the isolation of the COVID-19 pandemic and driven by significant economic interests seeking to replicate existing real-world "needs" in the digital realm. To the surprise of many individuals and companies, the predominant reaction after the pandemic was to return to physical, human contact and to enjoy once again the natural spaces that had been restricted during that period, rather than to immerse ourselves in a digital world viewed through goggles. This marked the beginning of the Metaverse's decline. Major companies had to absorb multimillion-dollar losses on Metaverse-related investments, and skepticism about the concept grew to the point where its leading promoter was compelled to stop talking about it. Just at that moment, almost without warning, AI emerged and relegated the Metaverse to oblivion.

AI is the result of many years of research and, for reasons we cannot yet categorize as positive or negative, it became accessible to the general public while still in development, generating an enormous amount of data that fueled exponential growth. A significant difference between AI and the Metaverse is that, although some major companies attribute their success to it, much of AI's foundation is open source, allowing anyone to use many different AI models freely. This same characteristic also enables users to improve existing models and create new ones, providing a speed and freedom that the Metaverse never had.

In fact, if the Metaverse were to keep developing, it would likely rely on AI tools; the technological foundation of much new software will be created and supported by AI. We do not believe this is a passing fad; rather, it is here to stay. It can be likened to what the Industrial Revolution was to the world in its time. Today we are living through a technological revolution that will likely change the way we live. In a very short time, significant advances have been made in fields such as medicine, physics, and education, raising numerous questions about employment, economics, and ethics.

- Have you conducted specific tests using these new technologies?

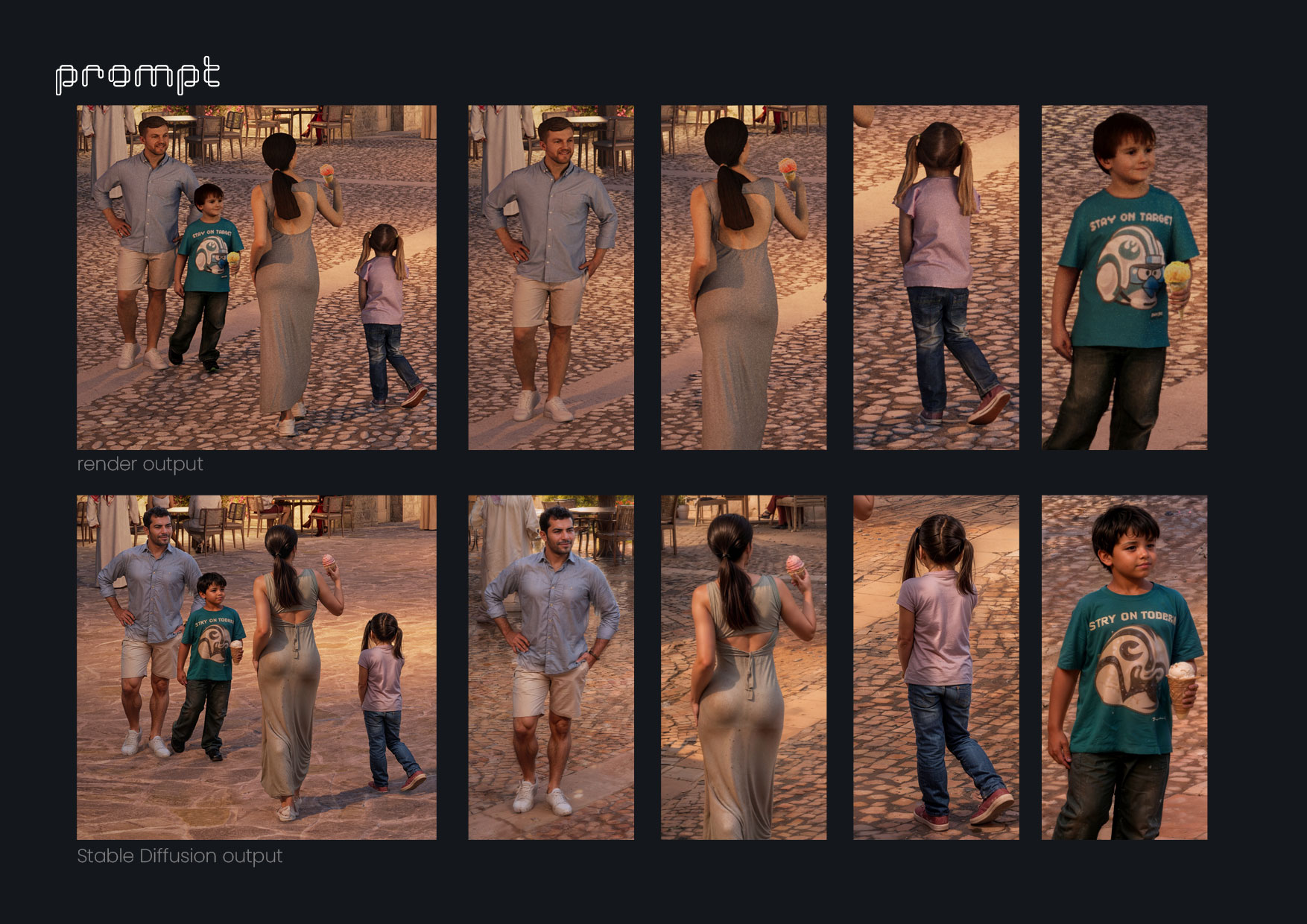

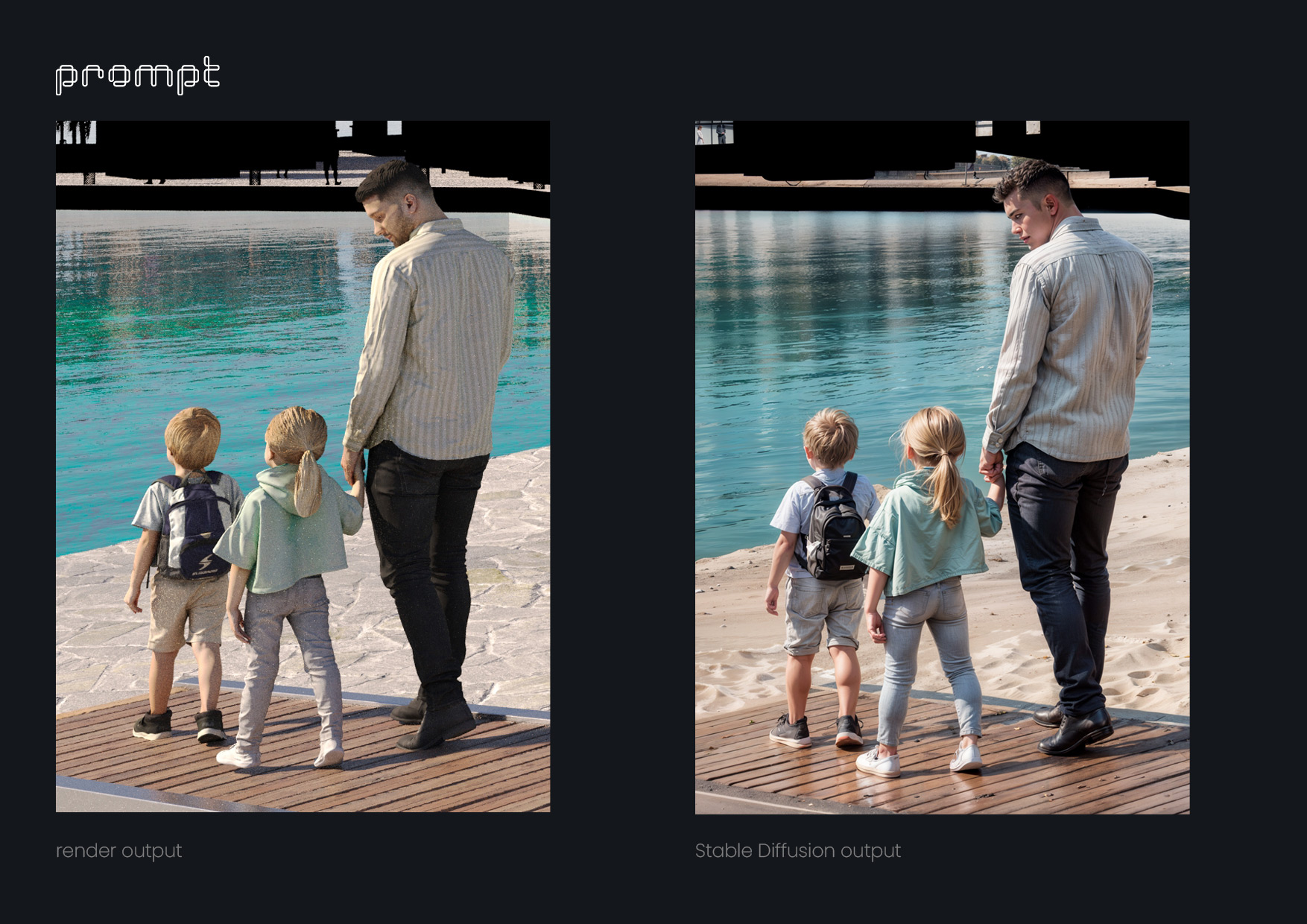

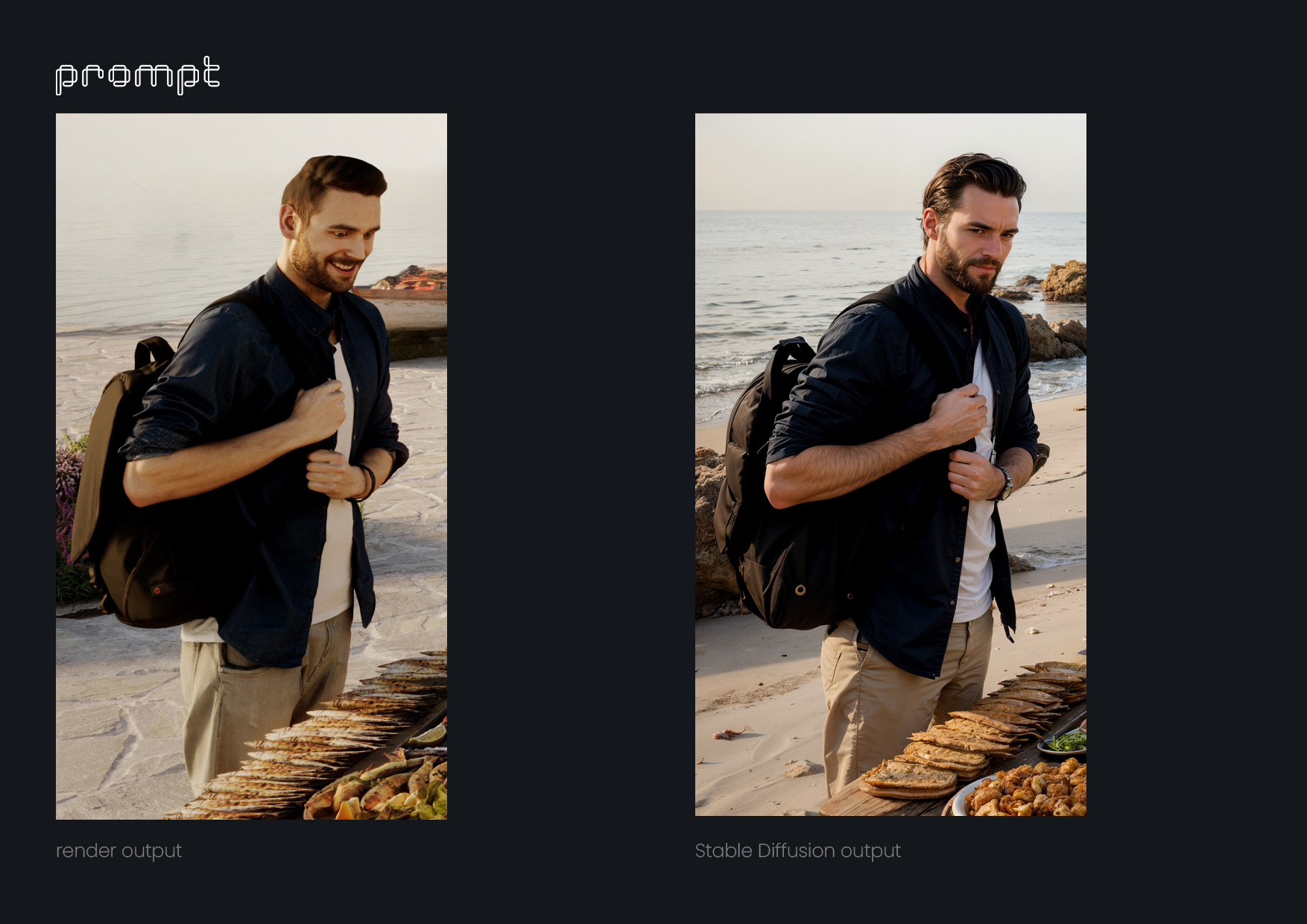

Yes. Driven primarily by curiosity and the need to understand how these new tools could assist us in our work, we began using DALL-E almost immediately to reconstruct parts of cities in still images, a task that would have taken us much longer in Photoshop. Shortly afterward, Midjourney emerged, and we started using it to produce initial visualizations of our clients' ideas. These visuals helped clients guide us on the direction each image should take. We also began creating short animation intros for some projects from Midjourney still images, which we later animated in After Effects. In parallel, we kept learning, through trial and error, the tool that is undoubtedly the most powerful for our type of work: Stable Diffusion. With it, we moved from animation experiments to making complex corrections in still images, improving textures, adding organic details, and even creating more realistic characters based on AXYZ anima® PRO / ALL 3D and 4D people assets. We also used voice generation models to create initial animation drafts with voiceovers, which allowed us to refine them with the client and scriptwriter before hiring a professional voice actor.

- What kind of results have you obtained so far? Are these purely experimental results, or have you had the opportunity to apply them in specific projects or services?

Since these tools are continually evolving, there isn't yet much information on how to use them effectively, so they require a lot of trial and error. Because part of the studio remained focused on ongoing production, another part was able to dedicate time to research, and we've now reached a point where we can share and apply this learning across the studio. What has pleasantly surprised us is how quickly we've been able to integrate these AI tools into real projects. We all know that in our industry we often work to tight deadlines, with little room for error, let alone experimentation. This is where the significance of these new tools lies: they streamline processes and offer ideas and solutions we might never have considered, all at impressive speed.

However, it hasn't been without its challenges. For instance, we created a video with a new narrative approach for a project's introduction, but the client asked us to discard the entire intro, which had been generated from Midjourney images. They were hesitant to showcase such artificial-looking images, and in the end we couldn't determine whether it was personal preference or simply a lack of understanding of the tool and its capabilities. We had to replace all the Full HD images we had created with pixelated stock videos. That's why we see all of this as part of a process, of teaching as well as learning, similar to the resistance AutoCAD initially met in the world of 2D architectural drawing. Many people were against computer-aided drawing at first, but now, more than 20 years later, it's one of the most popular drawing tools.

- Could you tell us about one of these projects?

Recently, one of our clients was developing a project on an island in the Red Sea and wanted the project's presentation video to be more than just architectural; they wanted it to have a strong narrative component. They wrote to us:

For the introduction of the video, we need to tell the story of pilgrims during the migration (hijrah 620 A.D.) who, while crossing the Red Sea in their characteristic dhows (boats), are shipwrecked on a virtually deserted island. They burn the little wood they manage to salvage from the boats to make smoke signals, but they are never rescued, so they decide to settle on the island, developing an architecture based on coral, seaweed, stucco, and pyramidal, trunk-like forms. After a few years, the island's only spring dries up, and with it the small civilization disappears, leaving only the remnants of this unique architecture. We need a cool transition to convey that 1400 years have passed and we are in the present day, seeing our project proposal, which incorporates many features of the island's architecture.

All of this just one month before the deadline, alongside 16 images and a 2-minute 3D animation of the project itself... A year ago, the response would have been, "It's impossible to do this, or it will cost proportionally as much as making 'Avatar: The Way of Water.'" Fortunately, we were able to provide a viable solution. First, we started a dedicated GPT-4 chat about the Hijrah period, asking how the pilgrims might have dressed, what their customs were, what their dhows were like, what tools they used, and so on. We gathered a lot of information that helped us build an imaginary representation of that period. Then we pasted in Midjourney's instructions on how text prompts should be written to generate images, and gave GPT-4 several examples of prompts that had produced photorealistic images. With all this information, we asked GPT-4 to write image-generation prompts for each part of the script provided by our client. In this way we built a narrative through images, literally generating hundreds of images before finding one that worked, until the narrative gradually came together. Afterward, we adjusted the images in Photoshop Beta (also using its new AI tools), and in After Effects we animated some elements and added a parallax effect. With music, sound, and a few other effects, we achieved the strong narrative component the client wanted.
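The prompt-writing step described above can be sketched as plain text assembly. This is a minimal illustration, assuming the research notes, Midjourney's prompt guidelines, the example prompts and one script segment are combined into a single few-shot request; the function `build_prompt_request`, its section labels and all sample strings are hypothetical, and no GPT-4 or Midjourney API is called.

```python
from typing import List


def build_prompt_request(guidelines: str, examples: List[str], context: str, scene: str) -> str:
    """Assemble a hypothetical few-shot request asking a chat model for one image prompt.

    The section labels are illustrative assumptions, not a documented format.
    """
    parts = [
        "Background research on the period:",
        context.strip(),
        "Guidelines for writing image prompts:",
        guidelines.strip(),
        "Prompts that produced photorealistic results:",
    ]
    # Number each example so the model sees them as distinct reference samples.
    parts += [f"{i}. {ex.strip()}" for i, ex in enumerate(examples, start=1)]
    # Finish with the script segment the new prompt should illustrate.
    parts += ["Write one new prompt in the same style for this scene:", scene.strip()]
    return "\n\n".join(parts)


# Usage with made-up sample text standing in for the real notes and script.
request = build_prompt_request(
    guidelines="Describe subject, setting, lighting and camera; keep it concise.",
    examples=["weathered wooden dhow on a calm sea at dawn, photorealistic, 35mm"],
    context="Notes gathered earlier about clothing, tools and boats of the period.",
    scene="Shipwrecked travellers salvage timber on a deserted coral island.",
)
print(request)
```

Keeping the working examples in a fixed, numbered block is one way to steer a chat model toward the style of prompts that already succeeded. The interview describes an ongoing chat rather than a single request, so this is a simplification.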

- What is your vision for the future? How do you believe these tools are transforming or will transform the work of an artist, if they haven't already?

We firmly believe that artists should not feel threatened by this technology. Their role, not as craftsmen but as artists, should be to create beyond the tools: to discern which of the 100 images generated in minutes is the right one, the one that works with the others. Artists should be able to embrace quantity, which has become just another element of the creative process. Let's forget the "quantity vs. quality" debate. Let's lose the fear that our style is ours alone and that this technology steals it so others can use it without meaning. We are offered an infinite world of styles that will give rise to other styles, and let's remember that this is likely how we arrived at our own style in the first place. We are specialists in image creation tools, and this is just one more tool. If we become experts in it, we won't need to fear losing clients, because they won't be experts and will only scratch the surface of what it can do. In a way, AI is democratizing a large part of the artistic world that was previously reserved for major productions with actors, sets, cameras, and so on. Now, with a decent graphics card, imagination, and ingenuity, a single person can achieve quite good results. And this is just the ability to reproduce or imitate an existing visual language. The real challenge for artists is to find a genuinely new language for AI; this should be our only concern. All of this is certainly transforming, and will continue to transform, the way we work. The important thing is to understand, from a professional perspective, the potential of what we can achieve with these tools. Powerful graphic AIs have already revolutionized how digital content is presented: a glance at any social media platform shows how much content is now being created with AI.

- Today, we already see photo editing tools integrating AI for specific tasks. Do you believe that in the future this technology could be applied to rendering software? What revolutionary features or capabilities can you imagine this fusion offering?

Given the speed at which this technology has developed in the last year, we believe it's only a matter of time before it works effectively in rendering software. Remember, it's not just about the visual output AI can generate but also its ability to learn and improve as it is used and receives feedback on its performance. For example, a rendering engine could significantly reduce render times if it performed the same render multiple times with small changes to the settings and recorded the results to reuse them in similar situations. Moreover, there is a fundamental aspect of AI image generation: each generation is governed by a random parameter called a "seed," which can be fixed (to replicate results) or left random. This random mode can generate ideas (for framing, lighting, textures, modeling) that the artist might never have thought of, and with careful attention, truly surprising results can be achieved. It wouldn't be unreasonable to think that at some point rendering software could implement this functionality in service of the artist's creativity. Certainly, AI will not only enhance rendering software but also revolutionize 3D modeling, which until now has been one of the most time-consuming parts of digital creation. Just imagine for a moment if 3D objects could be drawn using prompts. In architectural modeling especially, the values we work with are entirely geometric and mathematical, meaning they are measurable and precise, so they could likely be entered through prompts into an AI platform. This would let us automate tedious, repetitive processes; it would be like having a virtual 3D drawing assistant at hand.
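The difference between a fixed and a random seed can be illustrated with Python's standard `random` module. This is only an analogy under stated assumptions: in diffusion models the seed initializes the noise an image is generated from, whereas here it simply picks from hypothetical lists of framing, lighting and material options.

```python
import random
from typing import Dict, Optional

# Hypothetical option lists standing in for the "ideas" a random seed can surface.
FRAMINGS = ["eye level", "low angle", "aerial", "close-up"]
LIGHTING = ["golden hour", "overcast", "night", "harsh noon"]
MATERIALS = ["coral stone", "stucco", "timber", "seaweed thatch"]


def sample_variation(seed: Optional[int] = None) -> Dict[str, str]:
    """Pick one option per category.

    A fixed seed replays exactly the same picks; seed=None seeds the generator
    from system entropy, which behaves like a "random seed" setting.
    """
    rng = random.Random(seed)
    return {
        "framing": rng.choice(FRAMINGS),
        "lighting": rng.choice(LIGHTING),
        "material": rng.choice(MATERIALS),
    }


# Fixed seed: reproducible, so a promising result can be regenerated later.
print(sample_variation(42) == sample_variation(42))  # True

# Random exploration: try several seeds and keep the ones worth developing.
for seed in range(3):
    print(seed, sample_variation(seed))
```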

- We observe that most AI tools work primarily through text prompts. However, since you are graphic artists, wouldn't images, strokes, or mood boards be more natural inputs? What is your perspective on this approach?

In reality, most image-generating AIs have already integrated the ability to take visual inputs. In fact, for our work, it's crucial to have the extensions and options provided by the different platforms. The most versatile and powerful of them all (though less user-friendly) is Stable Diffusion, to which several tremendously useful extensions can be added:

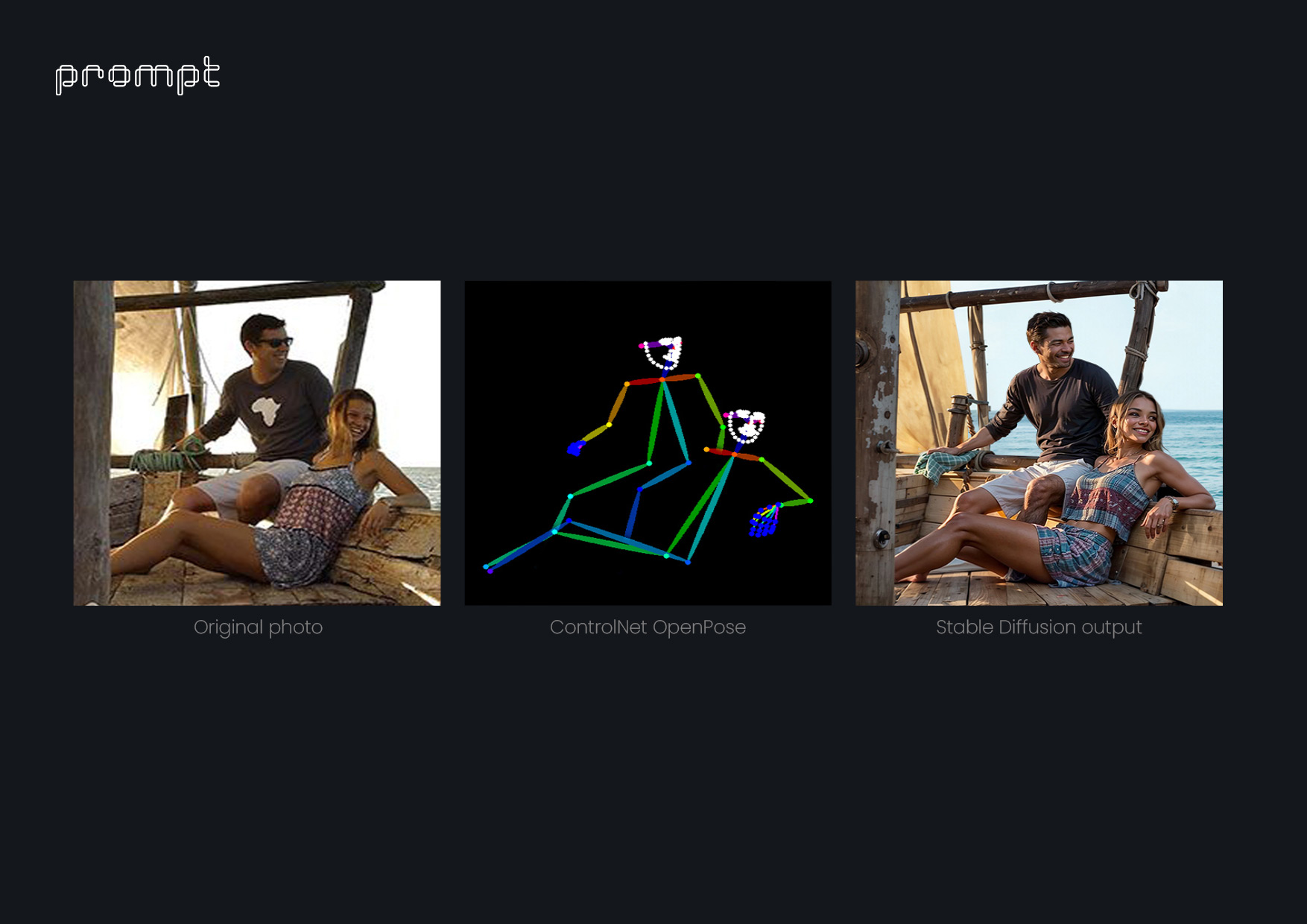

ControlNet:

It's an extension that analyzes an image provided by the user and has many different detection modes. Once the user's desired element is detected, it can be instructed through text prompts to modify only that selected part. For example:

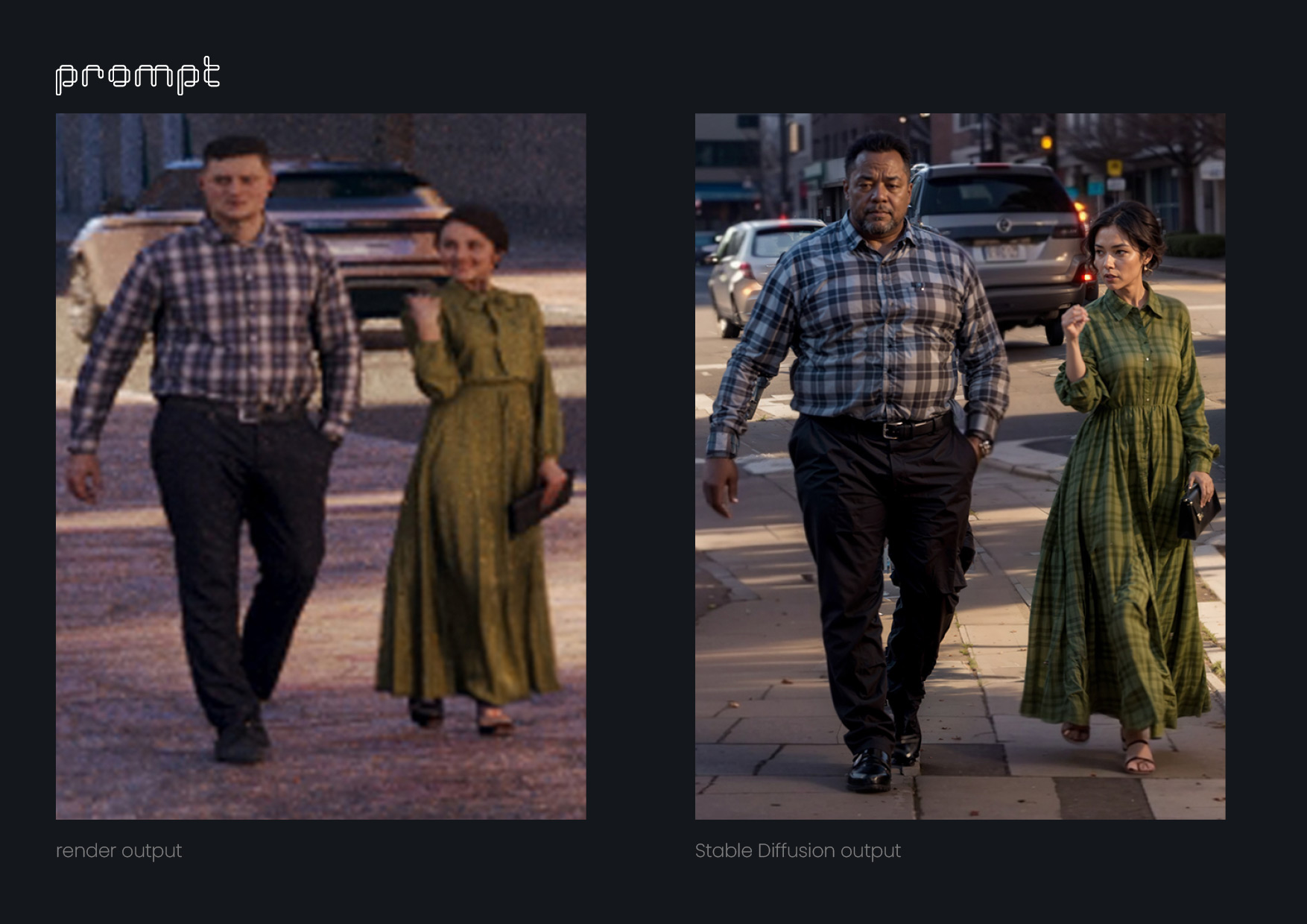

- "OpenPose mode" lets us determine the pose of human figures in images (body, hands, faces, etc.). With this mode, we can detect the pose of AXYZ anima® PRO / ALL 3D and 4D people characters already integrated into a scene. By keeping the camera angle and lighting, we can create even more realistic variations of the same character, which can then be further composited in Photoshop.

- "Reference mode" is used to copy the style of one image and apply it to another.

- "Depth mode" is for detecting volumes in the space of an image and then reproducing them in another image.

- "HED mode" detects the fine edges that define the contours of the different elements in an image. It is one of the most powerful modes and is constantly evolving thanks to user contributions.

SD Ultimate Upscaler:

This extension is used to upscale, reconstruct, and add detail to low-resolution images. We've all encountered situations where a client requests a very specific texture and sends us a 75x75 px photo but wants that texture to cover 80% of a 4000 px image. Previously, we would have spent hours trying to replicate that texture. Now, thanks to this extension, we can use exactly the texture the client wants without losing quality.
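The arithmetic behind the texture example can be sketched as follows, assuming "80% of a 4000 px image" means a square region 3200 px wide. Tiled upscalers generally process a large output in overlapping tiles so the full image never has to be generated in one pass; the 512 px tile size, 32 px overlap and the helper names below are illustrative assumptions, not the extension's actual settings or code.

```python
import math
from typing import List, Tuple


def upscale_factor(src_px: int, target_px: int) -> float:
    """Linear scale needed to stretch src_px pixels across target_px pixels."""
    return target_px / src_px


def tile_grid(width: int, height: int, tile: int = 512, overlap: int = 32) -> List[Tuple[int, int, int, int]]:
    """Return (x0, y0, x1, y1) boxes of overlapping tiles that cover the whole image.

    Tile size and overlap are hypothetical values chosen for illustration.
    """
    step = tile - overlap
    cols = max(1, math.ceil((width - overlap) / step))
    rows = max(1, math.ceil((height - overlap) / step))
    boxes = []
    for r in range(rows):
        for c in range(cols):
            # Clamp the last row/column so tiles end exactly at the image border.
            x0 = min(c * step, max(0, width - tile))
            y0 = min(r * step, max(0, height - tile))
            boxes.append((x0, y0, min(x0 + tile, width), min(y0 + tile, height)))
    return boxes


target_width = 4000 * 80 // 100  # texture spans 80% of a 4000 px-wide render
factor = upscale_factor(75, target_width)
print(f"{factor:.1f}x")  # 42.7x
boxes = tile_grid(target_width, target_width)
print(len(boxes))  # 49 (a 7 x 7 grid)
```

The overlap lets neighbouring tiles share context at their borders, so seams between independently processed tiles can be blended.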

Inpaint:

It lets us paint over only the part of an image we want to modify and then regenerate that area with a text prompt.

Outpaint:

From the same image, it generates content beyond the boundaries of the original frame.

These are just some of the extensions available for creating images from visual inputs. What's wonderful about all of this is that, because these tools are open source, their development depends on the contributions of users and developers rather than on a single company motivated solely by economic interests.

- Looking ahead to the future, how do you envision a potential evolution of the work you are currently engaged in?

Remarkable strides have been made in static image generation, but the significant challenge lies in animation. So far, many AI systems generate one frame at a time, treating each frame as independent. This can cause instability or a lack of consistency between frames, making the whole image look somewhat "shaky" during playback. This instability will likely be resolved over time. However, as mentioned earlier, the most intriguing prospect is the development of a visual language that doesn't merely mimic what we already do but instead embraces these visual inconsistencies and instabilities, viewing them not as errors but as virtues. Our near-future aspirations are rooted in the pursuit of this new mode of expression.
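The "shaky" effect described above can be quantified with a simple, hypothetical flicker measure: the mean absolute per-pixel change between consecutive frames. The sketch below uses synthetic frames rather than real model output, comparing frames generated independently with frames that each evolve slightly from the previous one; the function names and the `drift` parameter are assumptions made for illustration.

```python
import random
from typing import List

Frame = List[float]  # a grayscale frame flattened to pixel values in [0, 1]


def flicker(frames: List[Frame]) -> float:
    """Mean absolute per-pixel change between consecutive frames.

    Higher values mean a more "shaky" sequence on playback.
    """
    if len(frames) < 2:
        return 0.0
    total, count = 0.0, 0
    for prev, cur in zip(frames, frames[1:]):
        total += sum(abs(a - b) for a, b in zip(prev, cur))
        count += len(cur)
    return total / count


def independent_frames(n: int, pixels: int, seed: int = 0) -> List[Frame]:
    """Each frame is generated on its own, with no memory of the previous one."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(pixels)] for _ in range(n)]


def coherent_frames(n: int, pixels: int, seed: int = 0, drift: float = 0.01) -> List[Frame]:
    """Each frame is the previous one plus a small random change, clamped to [0, 1]."""
    rng = random.Random(seed)
    frames = [[rng.random() for _ in range(pixels)]]
    for _ in range(n - 1):
        frames.append([min(1.0, max(0.0, p + rng.gauss(0.0, drift))) for p in frames[-1]])
    return frames


print(f"independent: {flicker(independent_frames(10, 1000)):.3f}")  # roughly 0.33
print(f"coherent:    {flicker(coherent_frames(10, 1000)):.3f}")  # roughly 0.008
```

This pure-Python version is kept dependency-free for readability; real footage would more likely be handled with an array library.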

- In conclusion, I'd like to ask you, from your perspective as a user and an artist, how do you envision a future version of our flagship product, anima® PRO / ALL?

If we allow ourselves to think beyond technical complexities, we could envision a future version of anima® PRO / ALL that leverages its extensive database of human movements, together with visual inputs of individuals (ethnicities, clothing, situations), and interprets them using tools similar to OpenPose. This future anima® would let users input (or select from a limited list) a movement and a physical description of a character, and would then generate a consistent 3D mesh to integrate into the scene. This would give users incredible creative freedom and make each project, whether static images or animations, truly unique. We understand that we are still far from developing something like this, but considering everything we've witnessed in just one year of AI, we wouldn't be surprised if it became achievable in the near future.

While we are enthusiasts and promoters of AI development, we are also concerned about the potential misuse of these tools: uses that stray from the tool's purpose but are nonetheless dangerous. It's our responsibility to research and continue developing AI, but at the same time we must take time to understand its social implications and be ready to adapt to a world where this technology plays a fundamental role.

Many of the things we've discussed in this interview are based on our own experience using AI in our work. We may be mistaken in some of our reflections, or may have described certain processes somewhat superficially. We are therefore completely open to discussing these topics further or answering any questions that may arise.

SOME EXAMPLES OF AI-ENHANCED RENDERINGS

ABOUT THE ARTIST

Prompt is an established Barcelona-based creative studio with over a decade of international experience. Its diverse team of professionals from various backgrounds and cultures brings a comprehensive perspective to the creative process and offers bespoke visual solutions. In today's highly visual global landscape, where images serve as a universal language for promoting ideas and projects, the studio specializes in top-notch renders, animations, virtual tours, sales brochures, and websites. Its efficient workflow revolves around grasping each client's unique vision, meeting deadlines, and presenting comprehensive, cost-effective options.

To get in touch with them, check out their contact information below:

https://www.promptcollective.com/contact